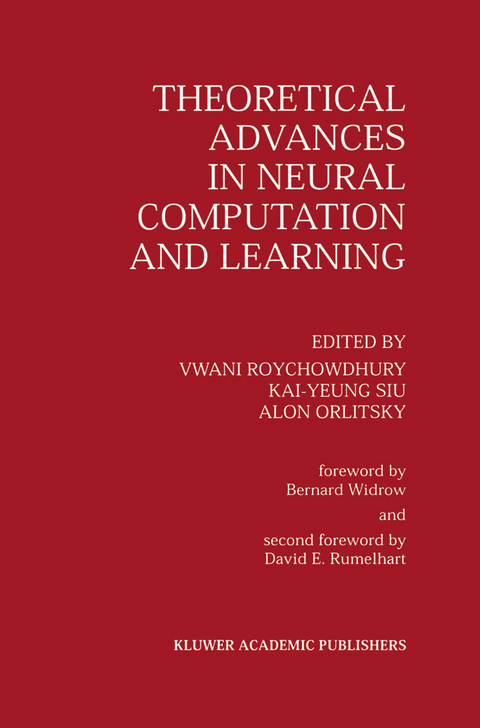

Theoretical Advances in Neural Computation and Learning

Springer-Verlag New York Inc.

978-1-4613-6160-2 (ISBN)

Contents:

I Computational Complexity of Neural Networks

1. Neural Models and Spectral Methods
2. Depth-Efficient Threshold Circuits for Arithmetic Functions
3. Communication Complexity and Lower Bounds for Threshold Circuits
4. A Comparison of the Computational Power of Sigmoid and Boolean Threshold Circuits
5. Computing on Analog Neural Nets with Arbitrary Real Weights
6. Connectivity Versus Capacity in the Hebb Rule

II Learning and Neural Networks

7. Computational Learning Theory and Neural Networks: A Survey of Selected Topics
8. Perspectives of Current Research about the Complexity of Learning on Neural Nets
9. Learning an Intersection of K Halfspaces Over a Uniform Distribution
10. On the Intractability of Loading Neural Networks
11. Learning Boolean Functions via the Fourier Transform
12. LMS and Backpropagation are Minimax Filters
13. Supervised Learning: can it Escape its Local Minimum?

| Field | Value |
|---|---|
| Additional info | XXIV, 468 p. |
| Place of publication | New York, NY |
| Language | English |
| Dimensions | 155 x 235 mm |
| Subject areas | Computer Science ► Theory / Study ► Algorithms; Computer Science ► Theory / Study ► Artificial Intelligence / Robotics; Natural Sciences ► Physics / Astronomy ► Theoretical Physics; Natural Sciences ► Physics / Astronomy ► Thermodynamics; Engineering ► Electrical Engineering / Power Engineering |
| ISBN-10 | 1-4613-6160-5 / 1461361605 |
| ISBN-13 | 978-1-4613-6160-2 / 9781461361602 |
| Condition | New |