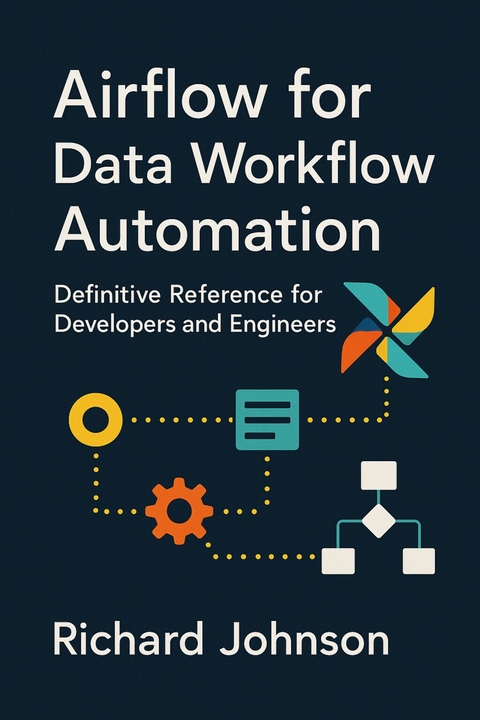

Airflow for Data Workflow Automation (eBook)

250 pages

HiTeX Press (publisher)

9780000807069 (ISBN)

'Airflow for Data Workflow Automation'

'Airflow for Data Workflow Automation' is a comprehensive guide designed for data engineers, architects, and platform specialists seeking to master the orchestration of robust, maintainable, and scalable data pipelines using Apache Airflow. Starting with the foundational principles of modern data workflow automation, the book meticulously explores key architecture concepts, the compelling rationale for orchestration tools, and the core terminology and patterns that underpin Airflow-powered systems. Readers will gain clarity on Airflow's internal mechanics and understand how to leverage its capabilities to efficiently automate common as well as advanced data workflow tasks.

Delving deeper, the book provides actionable insights into authoring, maintaining, and scaling Directed Acyclic Graphs (DAGs) within Airflow environments. It covers best practices in DAG design, dynamic workflow generation, advanced scheduling techniques, and robust testing methodologies. The coverage extends to a thorough exploration of operators, sensors, and Airflow's extensibility for custom integrations and interoperability with external systems, ensuring reliability, idempotency, and efficiency across diverse data operations.

Beyond core orchestration, the book addresses essential enterprise concerns, including security, governance, and observability, with practical guidance on authentication, secrets management, compliance, monitoring, and incident response. It offers proven strategies for cloud, hybrid, and containerized deployments, in addition to advanced topics such as plugin development, UI extension, and workflow versioning. Concluding with forward-looking use cases, ranging from MLOps and streaming pipelines to meta-workflows and community-driven innovation, this book equips professionals with the expertise to harness Airflow as a cornerstone of next-generation data infrastructure.

Chapter 2

Deep Dive into Airflow DAG Design

Mastering DAG design transforms Airflow from a simple scheduler into a flexible automation powerhouse. This chapter guides you through the technical intricacies and advanced patterns that underpin robust, maintainable, and scalable DAGs—arming you with the architectural wisdom needed to automate complex data workflows with precision and confidence.

2.1 DAG Authoring Best Practices

Robust and maintainable Directed Acyclic Graphs (DAGs) represent the cornerstone of scalable workflow orchestration systems. When authoring DAGs, adherence to disciplined design and implementation standards significantly improves reliability, facilitates collaboration, and reduces technical debt over time. Crucial to this endeavor is the thoughtful organization of DAG codebases, clear demarcation of business logic from orchestration instructions, and stringent application of naming conventions and versioning protocols.

A fundamental principle in DAG maintainability is the modular decomposition of code. This begins with a directory structure that enforces the separation of concerns across distinct components: workflow definitions, reusable code modules, and configuration assets. A canonical organization delineates root directories along these lines, sustaining clarity and easing discoverability as illustrated in Figure 2.1. The top-level dags/ directory contains DAG specification files, each of which should remain concise and focused on orchestration semantics, while isolated modules/ or libs/ folders encapsulate auxiliary Python packages that implement domain-specific utilities, data transformations, or API integrations. Configuration parameters (environment-specific constants, secrets, or resource constraints) reside in dedicated config/ locations, often encoded in YAML or JSON formats to decouple them from executable logic.

This clear partition simplifies reuse and testing by enabling business logic to evolve independently of orchestration mechanics. Encapsulation of data transformation and domain-specific algorithms within importable modules mitigates the risk of embedding complex logic directly within DAG files. Keeping DAG files lightweight, limited to task composition, scheduling, and dependency declaration, enhances readability while facilitating automated static analysis and version control management.
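The separation described above can be sketched in a few lines. This is a minimal illustration, not the book's own code: the module name `modules/user_transforms.py`, the file `config/user_ingestion.json`, the function `normalize_users`, and the `default_country` key are all hypothetical, and the three parts are shown in one block only so the example runs on its own.

```python
import json

# --- would live in modules/user_transforms.py (hypothetical module) ---
def normalize_users(rows, default_country):
    """Pure business logic: no Airflow imports and no I/O, so it is easy to test."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # drop rows without a usable email address
        cleaned.append(
            {"email": email, "country": row.get("country") or default_country}
        )
    return cleaned


# --- would live in config/user_ingestion.json (hypothetical file) ---
CONFIG_TEXT = '{"default_country": "DE"}'

# --- the DAG file would only load the config and call into the module ---
config = json.loads(CONFIG_TEXT)
rows = [
    {"email": "  Ada@Example.com "},
    {"email": "", "country": "CH"},
    {"email": "bob@example.com", "country": "CH"},
]
result = normalize_users(rows, config["default_country"])
print(result)
```

Because `normalize_users` knows nothing about the orchestrator, it can be imported by a task callable in a DAG file and by a unit test alike.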

Naming conventions constitute another pillar of effective DAG authoring. Consistent, descriptive nomenclature for DAG identifiers and task IDs directly supports operational traceability and debuggability. Essential best practices include adopting lowercase with underscores for Python file names (e.g., user_ingestion_dag.py), while DAG and task IDs ideally employ concise, hyphenated lowercase strings reflecting both the business domain and functional intent. For instance, a DAG orchestrating daily user ingestion might be named user-ingestion-daily, with tasks named extract-users, transform-users, and load-users. This approach harmonizes with logging systems and monitoring dashboards, enabling intuitive correlation between workflow executions and observed metrics or failures. Embedding explicit metadata within DAG definitions, such as owner contact, version tags, and descriptive docstrings, further enriches operational context.
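A convention like this is easiest to keep when it is checked automatically, for example in a CI step. The sketch below is one possible check, assuming the hyphenated-lowercase rule stated above; the names `ID_PATTERN`, `is_valid_id`, and `find_invalid_ids` are illustrative and not part of Airflow.

```python
import re

# Lowercase alphanumeric words joined by single hyphens, e.g. "user-ingestion-daily".
ID_PATTERN = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_id(identifier: str) -> bool:
    """Return True if the identifier follows the hyphenated-lowercase convention."""
    return ID_PATTERN.fullmatch(identifier) is not None


def find_invalid_ids(dag_id, task_ids):
    """Return every identifier (DAG ID first, then task IDs) that breaks the convention."""
    return [name for name in [dag_id, *task_ids] if not is_valid_id(name)]


violations = find_invalid_ids(
    "user-ingestion-daily",
    ["extract-users", "Transform_Users", "load-users"],
)
print(violations)
```

Here only `Transform_Users` is reported, since it uses uppercase letters and an underscore.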

Idempotence is a critical property to embed at both task and DAG levels, ensuring that workflow retries and partial re-runs do not generate unintended side effects. This requires designing tasks that can be safely re-executed without altering downstream state inconsistently. Common strategies include checkpointing output artifacts in deterministic locations, employing upsert logic for database writes, and conditioning external service calls to detect and gracefully handle duplicates or failures. DAG-level retry policies should be crafted to balance resilience with resource consumption; configuring exponential backoff delays combined with maximum retry limits can ameliorate transient infrastructure issues while avoiding runaway executions. Where tasks inherently involve irreversible side effects, compensating workflows or manual intervention checkpoints should be integrated into the orchestration.
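Two of the strategies above can be illustrated briefly. The following is a sketch under stated assumptions: it uses an in-memory SQLite database as a stand-in for a real warehouse, the `users` table and `upsert_users` helper are hypothetical, and the retry settings use the `retries`, `retry_delay`, `retry_exponential_backoff`, and `max_retry_delay` operator arguments as documented for Airflow 2.x, with values chosen purely for illustration.

```python
import sqlite3
from datetime import timedelta


def upsert_users(conn, rows):
    """Write rows so that re-running the same batch leaves the table unchanged."""
    # ON CONFLICT ... DO UPDATE requires SQLite 3.24 or newer.
    conn.executemany(
        """
        INSERT INTO users (user_id, email) VALUES (?, ?)
        ON CONFLICT(user_id) DO UPDATE SET email = excluded.email
        """,
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, email TEXT NOT NULL)")

batch = [(1, "ada@example.com"), (2, "bob@example.com")]
upsert_users(conn, batch)
upsert_users(conn, batch)  # simulated task retry with the same input

row_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
print(row_count)

# Retry policy that a DAG could pass as default_args (illustrative values).
default_args = {
    "retries": 3,
    "retry_delay": timedelta(minutes=1),
    "retry_exponential_backoff": True,
    "max_retry_delay": timedelta(minutes=30),
}
```

A plain `INSERT` would have failed or duplicated rows on the second call; the upsert makes the retry harmless, while the capped backoff bounds how long a failing task keeps retrying.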

Version control assumes paramount importance in promoting reproducibility and auditability of DAG workflows. All DAG source files, modules, and configurations must be placed under a version control system such as Git, enabling atomic commits, branching strategies, and peer code reviews. It is advisable to maintain separate versioning streams for DAG definitions and associated schemas or datasets when changes affect data contracts. Furthermore, tightly coupling DAG code repositories with Continuous Integration/Continuous Deployment (CI/CD) pipelines enforces correctness through automated linting, testing, and deployment gates. Incremental deployment models, e.g., feature-flagged rollout of new DAG versions, allow safe production migration without service interruptions.

Ensuring code quality extends beyond version control into static analysis and testing frameworks tailored for DAG-centric repositories. Automated linters verify compliance with coding standards and guard against common antipatterns such as unused imports or overly nested blocks. Type checking tools, such as mypy, can be leveraged to detect subtle errors in task parameter passing or module interfaces, which are often the source of runtime failures in dynamically typed languages like Python. Unit tests focus on validating pure business logic encapsulated outside the DAG files, facilitating deterministic execution independent of the underlying orchestration framework. Integration tests, while more complex, are instrumental in verifying end-to-end workflow correctness, often employing mocks or test-specific execution backends. Adopting a systematic testing regime mitigates regressions and promotes confidence during iterative enhancements.
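As an illustration of unit-testing business logic outside the DAG file, the sketch below uses the standard library's `unittest`. The function `deduplicate_by_key` is a hypothetical example of such logic; its type hints are the kind of annotation a checker such as mypy can verify.

```python
import unittest
from typing import Any, Dict, List


def deduplicate_by_key(records: List[Dict[str, Any]], key: str) -> List[Dict[str, Any]]:
    """Keep the last record seen for each key value, ordered by first appearance of the key."""
    latest: Dict[Any, Dict[str, Any]] = {}
    for record in records:
        latest[record[key]] = record
    return list(latest.values())


class DeduplicateByKeyTest(unittest.TestCase):
    def test_keeps_last_record_per_key(self):
        records = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}, {"id": 1, "v": "c"}]
        self.assertEqual(
            deduplicate_by_key(records, "id"),
            [{"id": 1, "v": "c"}, {"id": 2, "v": "b"}],
        )

    def test_empty_input(self):
        self.assertEqual(deduplicate_by_key([], "id"), [])


# Run the tests programmatically so the sketch works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(DeduplicateByKeyTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

Neither test needs a scheduler, a metadata database, or any Airflow import, which is what keeps this layer of testing fast and deterministic.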

The synthesis of these best practices (modular directory organization, diligent separation of logic and orchestration, consistent naming and metadata conventions, robust idempotent task design, comprehensive version control, and rigorous testing) lays the foundation for scalable and maintainable DAG ecosystems. Figure 2.1 captures this architectural philosophy in a concrete visual schema, illustrating how logical segregation facilitates clarity, reusability, and operational robustness in complex DAG implementations.

2.2 Dynamic DAG Creation and Template Expansion

The creation of directed acyclic graphs (DAGs) in advanced workflow orchestration systems, such as Apache Airflow, often transcends static, manually authored definitions when addressing complex, variable, or large-scale data pipelines. Dynamic DAG generation emerges as a crucial technique to programmatically instantiate and scale workflows, driven by external parameters, configuration data, or systematic logic patterns. This approach is invaluable when the sheer volume or variability of workflow tasks precludes static coding, enabling more flexible, maintainable, and efficient pipeline definition.

The primary principle guiding dynamic DAG creation is that DAG definitions should be generated at parse time in a deterministic, repeatable manner, derived from parameter spaces or metadata repositories. Rather than hardcoding every task and dependency, the system leverages programmatic constructs to instantiate DAG topology according to input configurations. This dynamic instantiation facilitates scaling both vertically (more tasks within a DAG) and horizontally (larger numbers of DAG variants), while maintaining consistent control over execution semantics.

Parameter-driven DAG construction is the foundational mechanism underpinning this flexibility. Instead of embedding task definitions directly in the source code, DAGs are constructed from parameter lists, JSON/YAML configuration files, databases, or other structured inputs. These parameters may encode task identifiers, resource specifications, schedule intervals, or conditional logic that influence task generation and dependency relationships. For example, one might define a cluster of similar ETL workflows by loading schema names and partition identifiers from an external JSON configuration, then programmatically instantiate corresponding tasks that extract, transform, and load data according to those parameters. This decouples workflow specification from the DAG source code and enables external teams or systems to influence pipeline behavior dynamically.
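The ETL example above can be sketched as a deterministic expansion step. This is a simplified illustration that deliberately avoids importing Airflow: it only derives task IDs and dependency edges from configuration, which a DAG file could then map onto operators. The config layout, `STAGES`, and `expand_tasks` are assumptions made for this example.

```python
import json

# Stand-in for an external JSON configuration file (hypothetical layout).
CONFIG_TEXT = """
{
  "schemas": [
    {"name": "sales", "partitions": ["eu", "us"]},
    {"name": "billing", "partitions": ["eu"]}
  ]
}
"""

STAGES = ("extract", "transform", "load")


def expand_tasks(config):
    """Return (task_ids, edges) with one extract -> transform -> load chain per partition."""
    task_ids, edges = [], []
    # Sorting makes the result independent of the order entries appear in the config,
    # so every parse of the same configuration yields the same topology.
    for schema in sorted(config["schemas"], key=lambda s: s["name"]):
        for partition in sorted(schema["partitions"]):
            chain = [f"{stage}-{schema['name']}-{partition}" for stage in STAGES]
            task_ids.extend(chain)
            edges.extend(zip(chain, chain[1:]))
    return task_ids, edges


task_ids, edges = expand_tasks(json.loads(CONFIG_TEXT))
for upstream, downstream in edges:
    print(f"{upstream} >> {downstream}")
```

Adding a partition to the configuration adds a three-task chain without touching the code, which is the decoupling the paragraph above describes.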

To facilitate the repeated use of shared logic and reduce boilerplate, macro and template expansion patterns have become a common design idiom. These patterns enable encapsulation of common task creation logic into reusable templates or functions, which accept parameters to customize individual DAG and task instances. Template expansion effectively abstracts repeated process fragments, such as a standard data validation step or a notification...

| Publication date (per publisher) | 22.5.2025 |
|---|---|
| Language | English |
| Subject area | Mathematics / Computer Science ► Computer Science |
| ISBN-13 | 9780000807069 / 9780000807069 |


Size: 5.2 MB

Copy protection: Adobe DRM

Adobe DRM is a copy-protection scheme intended to protect the eBook against misuse. The eBook is authorized to your personal Adobe ID at download time. You can then read it only on devices that are also registered to your Adobe ID.


File format: EPUB (Electronic Publication)

EPUB is an open standard for eBooks and is particularly well suited to fiction and nonfiction. The body text adapts dynamically to the display and font size, so EPUB is also a good fit for mobile reading devices.

System requirements:

PC/Mac: You can read this eBook on a PC or Mac. You need a

eReader: This eBook can be read on (almost) all eBook readers. It is not compatible with the Amazon Kindle, however.

Smartphone/tablet: Whether Apple or Android, you can read this eBook. You need a


Buying eBooks from abroad

For tax reasons, we can sell eBooks only within Germany and Switzerland. Unfortunately, we cannot fulfill eBook orders from other countries.
