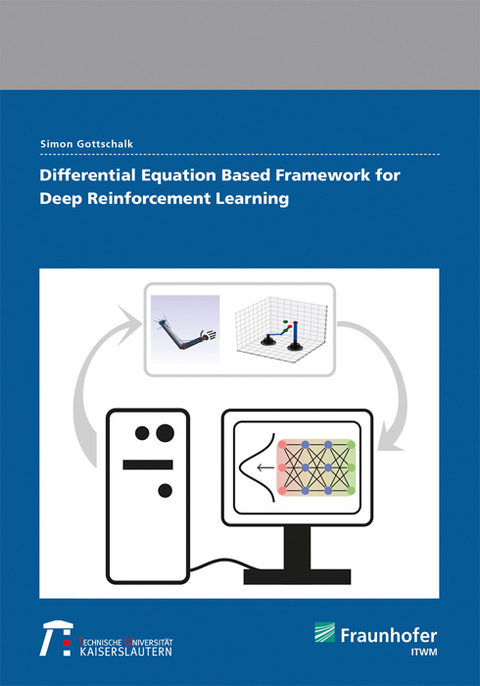

Differential Equation Based Framework for Deep Reinforcement Learning

2021

Fraunhofer Verlag

9783839616826 (ISBN)

In this thesis, we contribute to new directions within Reinforcement Learning, which are important for many practical applications such as the control of biomechanical models. We deepen its mathematical foundations by deriving theoretical results inspired by classical optimal control theory and the connection between neural networks and differential equations. The resulting approach is applied to control complex motions.

In this thesis, we contribute to new directions within Reinforcement Learning that are important for many practical applications, such as the control of biomechanical models. We deepen the mathematical foundations of Reinforcement Learning by deriving theoretical results inspired by classical optimal control theory. Deep Reinforcement Learning serves as the starting point for our derivations. Based on its working principle, we derive a new type of Reinforcement Learning framework by replacing the neural network with a suitable ordinary differential equation. Obtaining well-founded mathematical results within this differential-equation-based framework turns out to be a challenging research task, which we address in this thesis. The derivation of optimality conditions in particular plays a central role in our investigation. We establish new optimality conditions tailored to our specific situation and analyze a resulting gradient-based approach. Finally, we illustrate the power, working principle, and versatility of this approach on control tasks involving navigation in the two-dimensional plane, robot motions, and actuation of a human arm model.

| Publication date | 1 March 2021 |
|---|---|
| Additional info | numerous, mostly color illustrations and tables |
| Place of publication | Stuttgart |
| Language | English |
| Dimensions | 148 x 210 mm |
| Subject area | Mathematics / Computer Science ► Computer Science; Mathematics / Computer Science ► Mathematics ► Applied Mathematics |
| Keywords | Applied mathematics • B • Data Scientists • Deep Reinforcement Learning • Fraunhofer ITWM • computer scientists • machine learning • mathematicians • Necessary Optimality Conditions • neural networks & fuzzy systems • optimal control • Optimization • probability & statistics |
| ISBN-13 | 9783839616826 |
| Condition | New |
